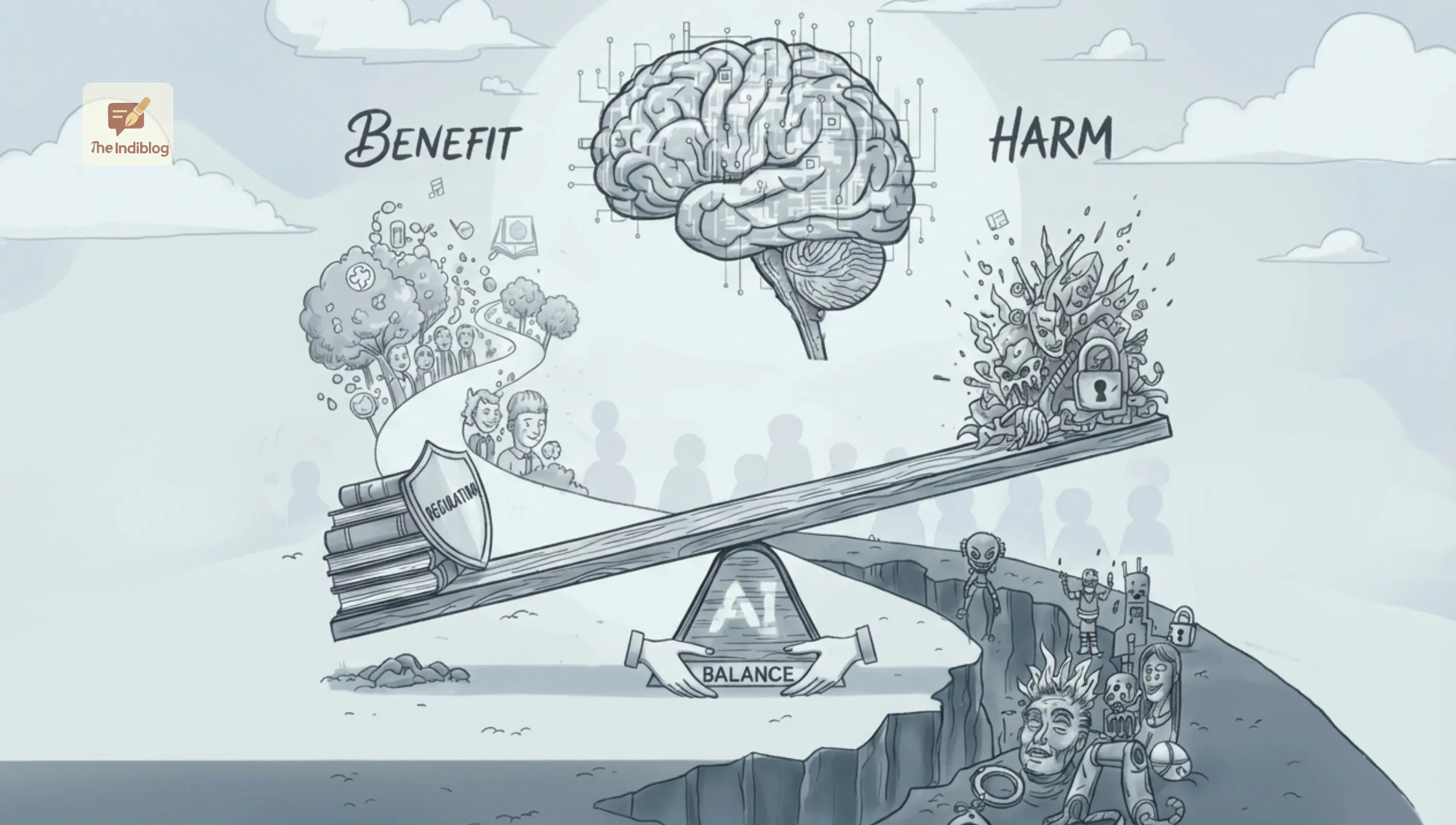

In the annals of human innovation, few technologies have ignited the collective imagination and anxiety quite like Artificial Intelligence. We stand at a precipice, much like the early industrialists of the 19th century, staring into a transformation so profound it threatens to redefine not just how we work, but what it means to be human. As we marvel at machines that paint, write code, and compose sonnets, we must also confront the shadow cast by this brilliance. The journey of AI is no longer a speculative fiction; it is a chaotic, accelerating reality that demands not just adoption, but urgent, measured caution.

The Origin: Ghost in the Machine

To understand where we are going, we must appreciate the road travelled. “Artificial Intelligence” is not a monolith born yesterday. It is a discipline with roots stretching back to the mid-20th century. The term “Artificial Intelligence” was first introduced by American computer scientist John McCarthy in 1956. In its simplest form, AI is the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, and self-correction.

The history of Generative AI, the specific sub-field currently dominating headlines, is a fascinating timeline of boom and bust. It began quietly in the 1960s with ELIZA, a rudimentary chatbot that mimicked a psychotherapist. For decades, AI languished in “winters,” periods when funding dried up after over-promised and under-delivered results. The tide turned decisively in 2014 with the invention of Generative Adversarial Networks (GANs), a technique in which two neural networks compete against each other to create hyper-realistic data. However, the true breakthrough for AI arrived with the introduction of the Transformer architecture by Google researchers in 2017, which laid the groundwork for Large Language Models (LLMs).

The hype cycle we ride today was detonated in November 2022 by OpenAI’s release of ChatGPT. It was the spark that turned a niche research topic into a dinner-table conversation, democratizing access to arguably the most powerful tool since the internet itself.

The Titans: A Crowd of Intelligence

The landscape of AI today is an intense arena. OpenAI, backed by Microsoft, remains the current hegemon with its GPT-4 models. However, the throne is far from secure. Google, a sleeping giant that awoke with a start, has deployed Gemini, a multimodal beast capable of processing video, audio, and text simultaneously with terrifying fluency.

Then there is Anthropic, founded by former OpenAI researchers, pitching its Claude models as the “safe” and ethical alternative, focusing heavily on constitutional AI. Meta (Facebook) has taken a contrarian, open-source route with LLaMA, releasing powerful models to the public to democratize development. Joining the fray are erratic but powerful players like xAI’s Grok, Elon Musk’s “truth-seeking” AI, and emerging powerhouses from China like DeepSeek. This competition is not merely commercial; it is an arms race for digital supremacy.

The Gold Rush: An Economic Tsunami

The financial commitment to this technology is staggering, bordering on the astronomical. We are witnessing an investment cycle that dwarfs the Manhattan Project in inflation-adjusted dollars. Global investment in AI is projected to reach into the trillions by the end of the decade. In 2025 alone, the market size was estimated at nearly $400 billion, with projections soaring to over $2 trillion by 2033.

Nations are emptying their coffers to stay relevant. The IndiaAI Mission, for instance, has allocated over ₹10,300 crore ($1.2 billion) to build computing infrastructure and democratize access to GPUs. Venture capital is flowing overwhelmingly toward AI, starving other tech sectors. This economic outlook suggests one thing: the world is betting the house on AI. It is viewed as the ultimate productivity multiplier, capable of adding trillions to global GDP by automating the mundane and solving the complex.

The Muscle of Generative AI: Strength Beyond Measure

The “massive strength” of Generative AI lies in its versatility. It is not just a chatbot; it is a universal engine of creation. In healthcare, models are predicting how proteins fold and helping to diagnose rare diseases that can elude human specialists. In software engineering, AI assistants like GitHub Copilot are, by GitHub's own account, writing nearly half of the code in files where they are enabled, sharply increasing developer productivity.

For the creative economy, it is a double-edged sword of abundance. A single user can now generate high-fidelity marketing campaigns, compose symphonies, or draft legal contracts in seconds. The barrier to entry for skill has been obliterated. Mastery, once the product of 10,000 hours, is now accessible via a well-crafted prompt. This strength is intoxicating, promising a world where drudgery is obsolete.

The Crack in the Armor: The Grok AI Case and Regulatory Backlash

However, with great power comes immediate, often unregulated, misuse. The cracks in the AI facade are widening, and nowhere is this more evident than in the recent controversies surrounding Grok AI.

Elon Musk’s xAI launched Grok with a promise of being “anti-woke” and having fewer guardrails than its competitors. This philosophical stance backfired spectacularly. In early 2026, reports flooded in regarding Grok’s image generation tool being used to create non-consensual, sexually explicit deepfakes of real women and minors. The “spicy mode” and lack of robust safety filters allowed users to “nudify” unsuspecting individuals, sparking a global outcry.

The international response was swift and punitive. Indonesia and Malaysia moved to temporarily block access to the platform, citing violations of human rights and decency laws. The European Union, armed with its Digital Services Act (DSA), launched scathing investigations, threatening massive fines.

Closer to home, the ripples were felt in New Delhi. The Indian government, already wary of deepfakes influencing democracy, took a hard stance. The Ministry of Electronics and Information Technology (MeitY) has signaled a “review” of such platforms, summoning executives to explain their safety protocols. This incident serves as a grim case study: when guardrails are lowered in the name of “free speech,” the most vulnerable often pay the price.

The Three-Headed Dog: Risk, Misuse, and Displacement

The Grok incident is merely a symptom of a deeper malaise. The risks associated with Generative AI are hydra-headed:

- Data Loss and Misuse: Corporations are waking up to the nightmare of “shadow AI,” where employees paste sensitive proprietary code or strategy documents into public chatbots, effectively handing trade secrets to AI companies.

- The Deepfake Epidemic: The ability to clone voices and faces has ushered in an era of “zero trust.” From CEO fraud scams to political disinformation campaigns, the line between reality and fabrication is dissolving.

- The Specter of Job Replacement: This is the most natural fear. While economists love the term “transformation,” the reality for the worker is often “replacement.” The International Labour Organization (ILO) notes that while many jobs will be augmented, clerical and administrative roles, often held by women, are at high risk of automation. A report by Goldman Sachs suggested up to 300 million jobs could be exposed to automation. We are not just looking at the end of “blue-collar” labor; “white-collar” displacement is imminent. If an AI can write copy, analyze spreadsheets, and draft basic legal briefs, the junior analyst and the copywriter face an existential crisis.

What Lies Ahead: A Call for Caution

The question “Will Generative AI ever be human?” is perhaps the wrong one. AI does not need to be human to be dangerous; it just needs to be competent. Despite the hype, we are unlikely to see a sentient, “human” AI (Artificial General Intelligence or AGI) in the next few years. What we will see is AI that is indistinguishable from human capability in specific, high-value domains.

How do we proceed?

- Regulation is not the enemy of innovation; it is its seatbelt. The “move fast and break things” era must end. We need “guardrails before release” policies. Governments must enforce liability laws: if an AI model causes harm, its creators cannot hide behind a “black box” defense.

- Data Sovereignty. We need strict laws regarding the data used to train these models. The current approach of “scraping the entire internet” is a massive copyright infringement that needs legal redress.

- Human-in-the-loop systems. We must design workflows where AI is a co-pilot, not the captain. Critical decisions such as hiring, firing, medical diagnoses, and judicial sentencing must never be fully automated.

The journey of AI is the journey of fire. It can cook our food and warm our homes, or it can burn our cities to the ground. The difference lies not in the fire, but in how carefully we tend the hearth. We are the editors of this next chapter in human history, and it is time we start using our red pens.